Tesla Dojo Supercomputer: “Magnitude Advantage” Over Competitors, Says Expert

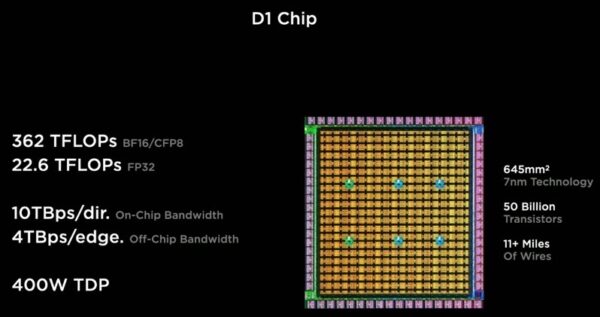

Following Tesla’s unveiling of its Dojo supercomputer D1 Chip on Thursday, computer hardware analyst Dylan Patel has shared his thoughts on the company’s new chip, which Tesla expects to be operational as soon as next year.

The report, released on Friday on Patel’s blog SemiAnalysis, breaks down Tesla’s D1 Chip, including the importance of the neural networks Tesla runs on its GPU clusters, which have been expanding over the years, according to Patel.

As a result, Tesla focused on scaling up bandwidth while keeping latency low when designing the chip’s huge architecture, which is how it can deliver such impressive bandwidth at 10 kW of total power consumption.

In addition to the D1 Chips themselves, Tesla detailed at its AI Day event how the chips can be arranged into cabinets, scaling up to thousands of chips for servers and the like and providing enormous amounts of computing power.

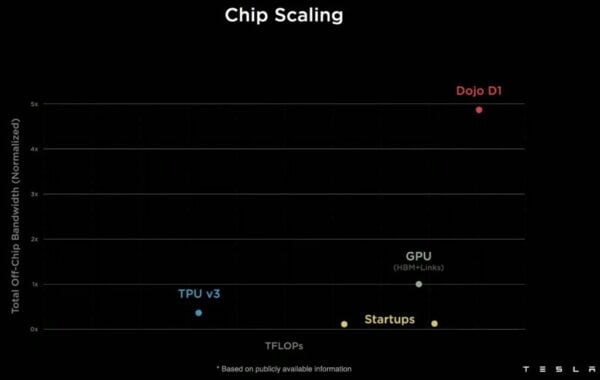

In the post, Patel writes, “cost equivalent versus Nvidia GPU, Tesla claims they can achieve 4x the performance, 1.3x higher performance per watt, and 5x smaller footprint.” Patel continues, “Tesla has a TCO advantage that is nearly an order magnitude better than an Nvidia AI solution.”

Tesla says the D1-based Dojo is the world’s fastest AI training supercomputer, which could have major implications for the future of automation and self-driving hardware. Despite some skepticism from Patel, the overall outlook and implications for this chip are exciting.

“If their claims are true, Tesla has 1 upped everyone in the AI hardware and software field. I’m skeptical, but this is also a hardware geek’s wet dream. SemiAnalysis is trying to calm down and tell ourselves to wait and see when it is actually deployed in production,” concluded Patel.