Tesla Unveils ‘D1’ Chip to Power the World’s ‘Fastest AI Training Computer’

During Tesla’s 2021 AI Day, the company finally revealed details about its Dojo supercomputer.

When it comes to its vehicles, the company said it was essentially “building a synthetic animal from the ground up.”

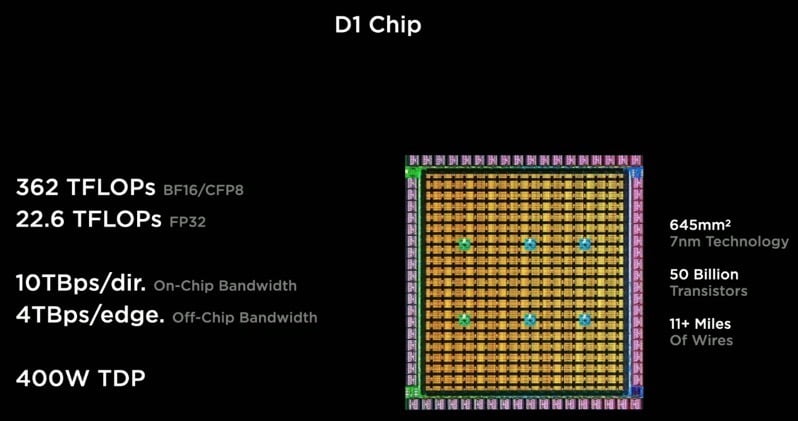

Ganesh Venkataramanan, Tesla’s Senior Director of Autopilot Hardware, took the stage to unveil the company’s in-house D1 chip, which powers the Dojo supercomputer.

Venkataramanan leads Project Dojo and is responsible for its silicon, systems and firmware/software. Before his 5.5 years at Tesla, he spent nearly 15 years at AMD as an engineering director.

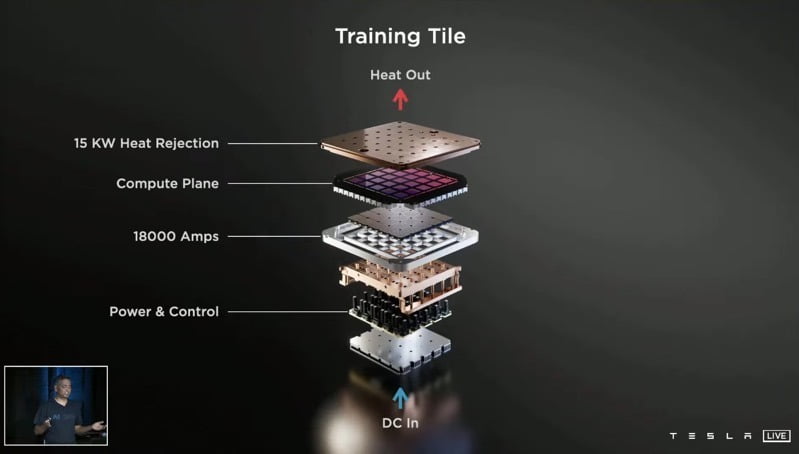

Built on a 7-nanometer manufacturing process, the D1 chip delivers 362 teraflops of processing power. Tesla connects 25 of these D1 chips to create what it calls a training tile.
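To put the tile figure in perspective, here is a rough back-of-the-envelope sketch. It assumes that per-chip peak rates simply add up across the 25 chips, an idealization that ignores interconnect and utilization losses; the constant and function names are hypothetical and chosen only for illustration.

```python
# Idealized peak throughput of one Dojo training tile, using the figures
# quoted in this article. Assumption: per-chip peak rates add linearly,
# ignoring interconnect overhead and real-world utilization.
D1_TFLOPS = 362        # per-chip figure quoted by Tesla
CHIPS_PER_TILE = 25    # D1 chips per training tile

def tile_tflops(chip_tflops: float = D1_TFLOPS, chips: int = CHIPS_PER_TILE) -> float:
    """Return the idealized peak teraflops for one training tile."""
    return chip_tflops * chips

per_tile = tile_tflops()
# 1 petaflop = 1,000 teraflops
print(f"{per_tile:,} TFLOPS, or about {per_tile / 1000:.2f} PFLOPS per tile")
```

Under these assumptions a single tile works out to roughly 9 petaflops of peak compute.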

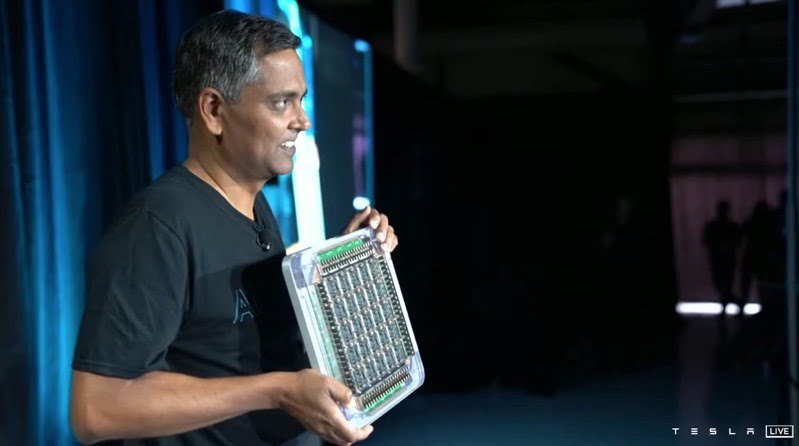

Venkataramanan proudly displayed a D1-powered training tile on stage, showing that the hardware actually exists. Tesla then places 120 of these training tiles across multiple server cabinets for a combined 1.1 exaflops of computing power. “We will quickly assemble the first cabinet,” Venkataramanan said.
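The 1.1-exaflop figure can be checked with simple multiplication from the chip up. This is a sketch under the assumption that peak rates scale linearly with chip count (real training throughput will be lower); one exaflop is 10^18 floating point operations per second, or one million teraflops. The names below are hypothetical.

```python
# Scale-up arithmetic from one D1 chip to 120 training tiles, using the
# article's figures. Assumption: peak rates add linearly across chips.
D1_TFLOPS = 362        # per-chip figure quoted by Tesla
CHIPS_PER_TILE = 25    # D1 chips per training tile
TILES = 120            # training tiles in the full system

def system_exaflops(tiles: int = TILES) -> float:
    """Return the idealized peak exaflops for a given number of tiles."""
    chips = tiles * CHIPS_PER_TILE     # 120 * 25 = 3,000 chips
    tflops = chips * D1_TFLOPS         # 3,000 * 362 = 1,086,000 TFLOPS
    return tflops / 1_000_000          # 1 EFLOPS = 10^6 TFLOPS = 10^18 FLOPS

print(f"{TILES * CHIPS_PER_TILE:,} chips -> {system_exaflops():.3f} EFLOPS")
```

The result, about 1.086 exaflops, rounds to the 1.1 exaflops Tesla quoted.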

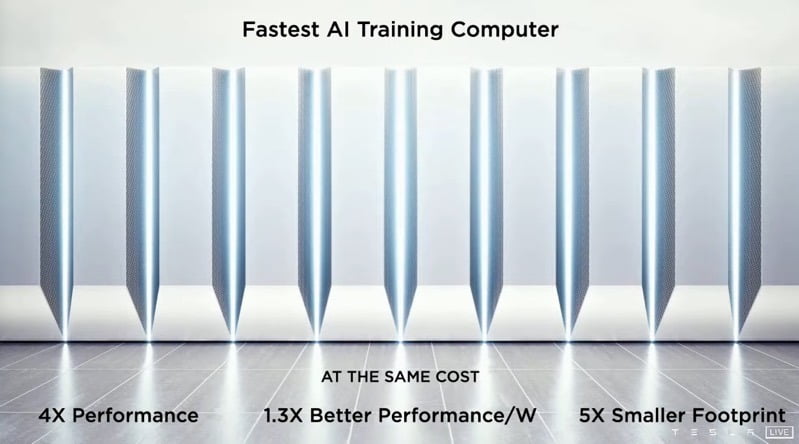

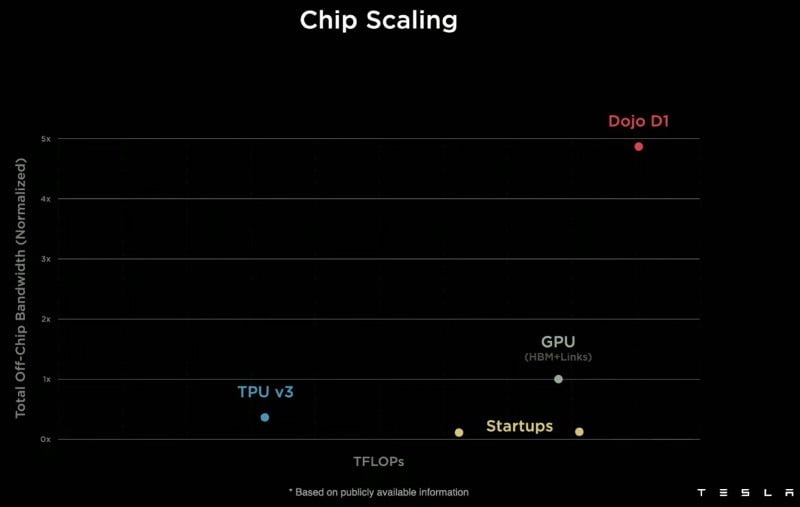

According to Tesla, the D1 chip will give the company the world’s fastest AI training computer, offering 4x the performance of existing setups at the same cost, 1.3x better performance per watt and a 5x smaller footprint.

Tesla said it would be assembling its first cabinets “pretty soon”. Musk said the company “should have Dojo operational next year.”

Dojo will train models on video data collected by Tesla vehicles, with the goal of making the Autopilot and Full Self-Driving software smarter over time.

Tesla designing its own silicon recalls Apple and its A-series chips for iPhone and iPad, and its M-series chips for the Mac and the new iPad Pro lineup. Tesla continues to push vertical integration, relying more on itself and less on outside suppliers, which can cause delays in production and deliveries.

It’s a quintillion (10^18) floating point operations per second – enough to simulate a human brain

— Elon Musk (@elonmusk) August 20, 2021